Word embeddings

From the TensorFlow documentation word embeddings documentation

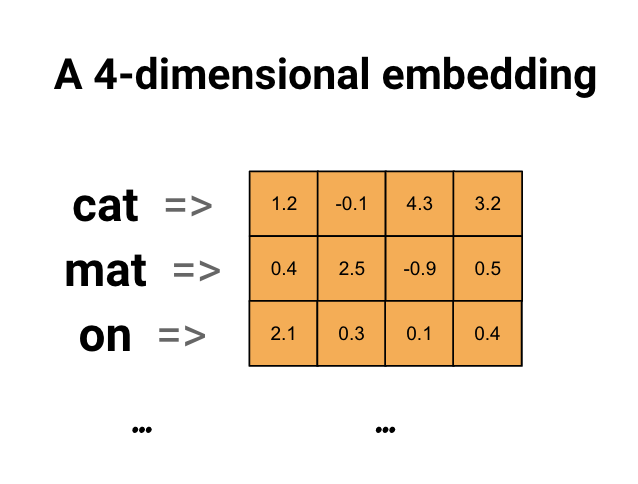

Word embeddings give us a way to use an efficient, dense representation in which similar words have a similar encoding.

Importantly, you do not have to specify this encoding by hand. An embedding is a dense vector of floating point values (the length of the vector is a parameter you specify).

Instead of specifying the values for the embedding manually, they are trainable parameters (weights learned by the model during training, in the same way a model learns weights for a dense layer).

It is common to see word embeddings that are 8-dimensional (for small datasets), up to 1024-dimensions when working with large datasets. A higher dimensional embedding can capture fine-grained relationships between words, but takes more data to learn.

Let us explore the word embedding with some examples. We will use spacy for demonstration.

import numpy as np

import spacy

from sklearn.metrics.pairwise import cosine_similarity

# Need to load the large model to get the vectors

nlp = spacy.load('en_core_web_lg')

nlp("queen").vector.shape

We find the word embedding of a single word queen and find that we have a vector with 1 row and 300 columns. Therefore a single word is converted to 300 numerical values.

We find the similarity between the words using cosine similarity

cosine_similarity([nlp("queen").vector],[nlp("king").vector])

0.725261

cosine_similarity([nlp("queen").vector],[nlp("mother").vector])

0.44720313

cosine_similarity([nlp("queen").vector],[nlp("princess").vector])

0.6578181

We observe that the similarity between queen and king is the highest , followed by princess and mother

We will see that how we can use the similarity between sentences

x1 = nlp("I am a software consultant").vector

x2 = nlp("Hey ,me data guy").vector

x3 = nlp("Hey ,me plumber").vector

x1.shape , x2.shape , x3.shape

((300,), (300,), (300,))

We find that the shape of the sentence vectors are also 1 x 300. The individual words also have shape 1 x 300 . But for a sentence , we average the vectors so as to get the shape also as 1 x 300.

cosine_similarity([nlp("x1").vector],[nlp("x2").vector])

0.7383951

cosine_similarity([nlp("x1").vector],[nlp("x3").vector])

0.64217263

We see that the similarity between the senctence with software consultant and data guy is higher than the sentence with software consultant and plumber